Add New Nodes

# Add new nodes to xCAT:
# Node names 0XX are on subnet .1, 1XX on subnet .2, 2XX on subnet .3, etc.
# The groups compute,ipmi are ALWAYS required.
### For Supermicro/SGI/Intel nodes:
nodeadd n[201-202] groups=compute,ipmi,supermicro
### For Lenovo nodes:
nodeadd n[065-067] groups=compute,ipmi,lenovo
### For Dell nodes:
nodeadd n[303-305] groups=compute,ipmi,dell
# Set the /etc/hosts file
makehosts
# Set the local DNS
makedns
# Set the local DHCP
makedhcp -n
# Start discovery of the new nodes; change the noderange to the new node names
nodediscoverstart noderange=n[201-202] -V
# Follow the log
journalctl -f
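The naming convention above (0XX on subnet .1, 1XX on .2, 2XX on .3) can be checked before running nodeadd. This is a minimal bash sketch, assuming node names are always “n” followed by exactly three digits; “node_subnet” is a hypothetical helper, not an xCAT command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the subnet number from a node name,
# following the convention 0XX -> .1, 1XX -> .2, 2XX -> .3, and so on.
# Assumes names of the form n + three digits (e.g. n065, n201).
node_subnet() {
  local name=$1
  local re='^n([0-9])[0-9]{2}$'
  if [[ $name =~ $re ]]; then
    # The hundreds digit + 1 gives the subnet number.
    echo $(( ${BASH_REMATCH[1]} + 1 ))
  else
    echo "unexpected node name: $name" >&2
    return 1
  fi
}
```

For example, “node_subnet n201” prints 3 and “node_subnet n065” prints 1, matching the nodeadd examples above.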

Next, boot the servers one at a time (press the power button on each, waiting 3 minutes between servers) so that they are discovered.

Check that the nodes were added to the xCAT database: the ‘journalctl -f’ output should show lines such as “xcat.discovery.nodediscover: n201 has been discovered”.

# See which nodes have been discovered so far:
nodediscoverls
# or refresh the list every 5 seconds:
watch -n 5 nodediscoverls
# When all nodes are discovered, run:
nodediscoverstop
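Instead of reading the list by eye, the output can be checked against the expected node names. This is a minimal bash sketch; “missing_nodes” is a hypothetical helper that reads the listing on stdin and searches for each name as a whole word, so it does not assume any particular column layout of the nodediscoverls output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each expected node that does not appear in the
# discovery listing read on stdin. Returns 0 only if every node was found.
missing_nodes() {
  local listing node missing=0
  listing=$(cat)
  for node in "$@"; do
    # -w: match the node name as a whole word (n201 does not match n2010)
    if ! grep -qw -- "$node" <<< "$listing"; then
      echo "$node"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: “nodediscoverls | missing_nodes n201 n202 && nodediscoverstop” stops discovery only once both nodes are listed.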

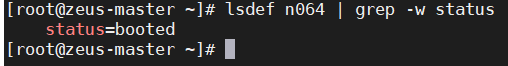

Check that “lsdef n201” returns the full node definition.

You can now SSH to the new node(s):

ssh n201

# You should see: "xCAT Genesis running on n201"

IPMI

If an IPMI factory reset is needed (currently Supermicro servers only):

Copy the IPMICFG tool from /usr/local/src on zeus-master to the new node (running Genesis), then run:

./IPMICFG-Linux.x86_64 -fd 2 ### RESET IPMI TO FACTORY DEFAULT if needed

Check that the IPMI “IP Address” is correct:

ipmitool lan print 1 |grep "IP Address "

To run this check across several new hosts, use the following loop (this example covers nodes n021-n024):

for i in n0{21..24}; do ssh $i "/usr/bin/ipmitool lan print 1 | /usr/bin/grep 'IP Address '";done
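The grep above also prints the “IP Address Source” line, since that line contains “IP Address ” too. This is a minimal bash sketch of a hypothetical parser, “bmc_ip_from_lanprint”, that keeps only the address itself; it assumes the usual “Name : value” layout of “ipmitool lan print” output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the BMC IP address from `ipmitool lan print 1`
# output read on stdin. Only the "IP Address    : x.x.x.x" line matches;
# "IP Address Source : ..." is skipped because "Source" comes before the colon.
bmc_ip_from_lanprint() {
  awk -F': *' '/^IP Address[ \t]+:/ { print $2; exit }'
}
```

Usage with the loop above (the parsing runs locally on the ssh output):

for i in n0{21..24}; do echo "$i $(ssh "$i" /usr/bin/ipmitool lan print 1 | bmc_ip_from_lanprint)"; done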

If the address is not correct, set it with:

ipmitool lan set 1 ipsrc static
ipmitool lan set 1 ipaddr 172.25.1.121   # addresses: 172.25.x.1nn (x is the subnet)
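The expected address can be derived from the node name. This bash sketch assumes that in 172.25.x.1nn, x is the node's subnet (0XX -> 1, 1XX -> 2, ...) and nn is the last two digits of the node name; that reading is inferred from the example (n021 -> 172.25.1.121) and should be confirmed against the site's address plan. “expected_bmc_ip” is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute the expected BMC address 172.25.x.1nn for a node.
# Assumption: x = hundreds digit + 1 (the subnet), nn = last two digits of the name.
expected_bmc_ip() {
  local re='^n([0-9])([0-9]{2})$'
  if [[ $1 =~ $re ]]; then
    echo "172.25.$(( ${BASH_REMATCH[1]} + 1 )).1${BASH_REMATCH[2]}"
  else
    echo "unexpected node name: $1" >&2
    return 1
  fi
}
```

Usage on the node: ipmitool lan set 1 ipaddr "$(expected_bmc_ip n021)"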

Check that IPMI login works (username and password).

If needed, add a new user with ipmitool:

## Add an Administrator user to IPMI
# Create user Administrator with ID 3
ipmitool user set name 3 Administrator
# Set the password for the Administrator user
ipmitool user set password 3 ZeusIPMI20
# Enable the Administrator user
ipmitool user enable 3
# Give the Administrator user admin privilege
ipmitool channel setaccess 1 3 callin=on ipmi=on link=on privilege=4
# Cold reset the IPMI
ipmitool mc reset cold
## Wait a few minutes!!!
ssh Administrator@n018-bmc
# On Lenovo systems you will be asked to change the password; type the ZeusIPMI20 password again and it will be accepted.
## Also for Lenovo systems:
asu set BootModes.SystemBootMode "Legacy Mode"
## For HP servers:
set /map1/config1 oemHPE_ipmi_dcmi_overlan_enable=yes
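The user-creation steps can be wrapped in one function so that a failing step stops the sequence. This is a minimal bash sketch; “ipmi_add_admin”, the “run” wrapper, and the IPMI_PASS and DRY_RUN variables are hypothetical names introduced here. With DRY_RUN=1 the commands are only printed (password included).

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical sketch of the Administrator-user steps above.
# Arguments: IPMI user ID and user name; the password comes from IPMI_PASS.
ipmi_add_admin() {
  local id=$1 name=$2
  if [[ -z $id || -z $name || -z ${IPMI_PASS:-} ]]; then
    echo "usage: IPMI_PASS=... ipmi_add_admin ID NAME" >&2
    return 1
  fi
  run ipmitool user set name "$id" "$name" &&
    run ipmitool user set password "$id" "$IPMI_PASS" &&
    run ipmitool user enable "$id" &&
    run ipmitool channel setaccess 1 "$id" callin=on ipmi=on link=on privilege=4 &&
    run ipmitool mc reset cold
}
```

Usage on the node: IPMI_PASS='...' ipmi_add_admin 3 Administrator, then wait a few minutes before the ssh test.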

—-

Disable LAN over USB on Lenovo nodes:

From root's home directory on zeus-master:

# replace #### with the BMC password
onecli config set IMM.LanOverUSB "Disabled" -b Administrator:####@n087-bmc
onecli config show IMM.LanOverUSB -b Administrator:####@n087-bmc
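When several Lenovo nodes are added at once, the two onecli commands can be looped. This is a minimal bash sketch; “disable_lan_over_usb” and the BMC_PASS and DRY_RUN variables are hypothetical, and it assumes every BMC is reachable as <node>-bmc with the Administrator user, as in the example above.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: disable LAN over USB on several Lenovo BMCs and show the
# resulting setting. BMC_PASS replaces the #### placeholder; DRY_RUN=1 only
# prints the set command (with the password masked).
disable_lan_over_usb() {
  if [[ -z ${BMC_PASS:-} ]]; then
    echo "set BMC_PASS first" >&2
    return 1
  fi
  local node target
  for node in "$@"; do
    target="Administrator:${BMC_PASS}@${node}-bmc"
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      echo "onecli config set IMM.LanOverUSB Disabled -b Administrator:****@${node}-bmc"
      continue
    fi
    onecli config set IMM.LanOverUSB "Disabled" -b "$target"
    onecli config show IMM.LanOverUSB -b "$target"
  done
}
```

Usage: BMC_PASS='...' disable_lan_over_usb n087 n088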

—-

Configure node aliases:

For HP compute nodes this is automatic.

For all other servers, set it manually for each node:

# change the node name (n017) and the alias (z001)
chdef n017 nicaliases='eno1!z001'
## After finishing all required nodes, run:
makehosts
makedns
makedhcp -n
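For many nodes, the chdef calls can be driven from a list of “node alias” pairs, with makehosts, makedns and makedhcp run once at the end. This is a minimal bash sketch; “set_aliases” and DRY_RUN are hypothetical, and the interface name eno1 is copied from the example above and may differ between server models.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: read "node alias" pairs on stdin, set nicaliases for each,
# then regenerate hosts, DNS and DHCP once. DRY_RUN=1 prints the commands.
set_aliases() {
  local run=()
  [[ ${DRY_RUN:-0} == 1 ]] && run=(echo)
  local node nic_alias
  while read -r node nic_alias || [[ -n $node ]]; do
    [[ -z $node || $node == \#* ]] && continue   # skip blank lines and comments
    # '!' is kept in single quotes so interactive history expansion cannot touch it
    "${run[@]}" chdef "$node" 'nicaliases=eno1!'"$nic_alias" || return
  done
  "${run[@]}" makehosts && "${run[@]}" makedns && "${run[@]}" makedhcp -n
}
```

Usage: printf 'n017 z001\nn018 z002\n' | set_aliases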

Set the OS image on the nodes and install them:

(You can replace n021 with a group name such as “hp” or “supermicro”, or with a node range, e.g. “n[021-022]”.)

If you use a group name, ALL the computers in that group will be affected.

So use a node range when adding new nodes.

rsetboot n[021-022] net
nodeset n[021-022] osimage=centos7.7-compute
rpower n[021-022] reset
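The three install steps can be chained so that a failure stops the sequence, with a guard that refuses group names (which would reinstall every node in the group). This is a minimal bash sketch; “install_nodes” and DRY_RUN are hypothetical, and the guard only accepts a single node (nNNN) or a simple range (n[AAA-BBB]).

```bash
#!/usr/bin/env bash
# Hypothetical sketch: rsetboot + nodeset + rpower for a node range, stopping at
# the first failing step. Group names are refused on purpose.
# DRY_RUN=1 prints the commands instead of running them.
install_nodes() {
  local range=$1 image=${2:-centos7.7-compute}
  local re='^n[0-9]{3}$|^n\[[0-9]+-[0-9]+\]$'
  if ! [[ $range =~ $re ]]; then
    echo "refusing '$range': pass nNNN or n[AAA-BBB], not a group" >&2
    return 1
  fi
  local run=()
  [[ ${DRY_RUN:-0} == 1 ]] && run=(echo)
  "${run[@]}" rsetboot "$range" net &&
    "${run[@]}" nodeset "$range" "osimage=$image" &&
    "${run[@]}" rpower "$range" reset
}
```

Usage: install_nodes 'n[021-022]' (quote the range so the shell does not treat the brackets as a glob).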

# Wait for nodes to boot, it’s a great time for a coffee break

———

Once the nodes have booted successfully, you can configure them with Ansible.

# On HP nodes, if they don’t boot after the image installation, check whether UEFI mode is enabled in the BMC web UI.

# The steps: power off, log in to the BMC web UI, go to Administration > Boot Mode, change it to Legacy BIOS, power on.

Ansible:

cd /install/ansible
# add the new node(s) to hosts.yaml
vi hosts.yaml
# RUN ON NEW NODES ONLY (in --limit, ':' joins hosts: n021:n022 means n021 and n022 only, not a range)
ansible-playbook -i hosts.yaml compute.yaml --limit n021:n022

Change home directories:

From zeus-master as root:

xdsh n[021-022] /home/zeususer/changehomedir_vkm_users.sh
xdsh n[021-022] /home/zeususer/changehomedir_bigmech_users.sh

!! THE NODES ARE NOW GOOD TO GO !!

The sections below are not needed when adding new compute nodes.

—

How to delete nodes, if needed:

nodepurge n[021-022]

—-

Run Ansible for all compute nodes:

cd /install/ansible
ansible-playbook -i hosts.yaml compute.yaml

For specific node(s) and only some tags:

# List the tags available for compute nodes
ansible-playbook -i hosts.yaml compute.yaml --list-tags
# One node
ansible-playbook -i hosts.yaml compute.yaml --limit 'n001' --tags nfs
# Range n044-n064 (note: --limit n044:n064 would select only n044 and n064)
ansible-playbook -i hosts.yaml compute.yaml --limit "$(seq -f 'n%03g' -s, 44 64)" --tags pbs
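Since the rest of this page uses xCAT node ranges such as n[021-022], a small converter can turn them into the comma-separated host list that --limit accepts. This is a minimal bash sketch; “xcat_range_to_limit” is a hypothetical helper that only understands the simple prefix[start-end] form, zero-padded to the width of the start number, and passes anything else through unchanged.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert an xCAT-style range like n[044-064] into
# "n044,n045,...,n064" for ansible-playbook --limit.
# Anything that is not prefix[start-end] (a single node, a group) is passed through.
xcat_range_to_limit() {
  local range=$1
  local re='^([A-Za-z]+)\[([0-9]+)-([0-9]+)\]$'
  if [[ $range =~ $re ]]; then
    local prefix=${BASH_REMATCH[1]} start=${BASH_REMATCH[2]} end=${BASH_REMATCH[3]}
    local width=${#start} i item out=()
    # 10# forces base 10 so that leading zeros (e.g. 044) are not read as octal
    for (( i = 10#$start; i <= 10#$end; i++ )); do
      printf -v item '%s%0*d' "$prefix" "$width" "$i"
      out+=("$item")
    done
    local IFS=,
    echo "${out[*]}"
  else
    echo "$range"
  fi
}
```

Usage: ansible-playbook -i hosts.yaml compute.yaml --limit "$(xcat_range_to_limit 'n[044-064]')" --tags pbs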

For the whole site (including infrastructure servers):

ansible-playbook -i hosts.yaml site.yaml --list-tags
ansible-playbook -i hosts.yaml site.yaml --tags nfs

———–

GPU NODES

vi /etc/default/grub
# Append the following to the GRUB_CMDLINE_LINUX line: modprobe.blacklist=nouveau
grub2-mkconfig -o /boot/grub2/grub.cfg
# From zeus-master, copy the nvidia.run file from /opt/src to the host
# Run the .run file on the node
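The vi edit can also be done non-interactively. This is a minimal bash sketch; “blacklist_nouveau” is a hypothetical helper that assumes the GRUB_CMDLINE_LINUX value is enclosed in double quotes, keeps a .bak copy, and does nothing if the option is already present. grub2-mkconfig still has to be run afterwards as shown above.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: append modprobe.blacklist=nouveau to GRUB_CMDLINE_LINUX
# in a grub defaults file (default /etc/default/grub). Safe to run twice.
blacklist_nouveau() {
  local file=${1:-/etc/default/grub}
  if grep -q '^GRUB_CMDLINE_LINUX=.*modprobe\.blacklist=nouveau' "$file"; then
    return 0   # already present, nothing to do
  fi
  # Insert the option just before the closing double quote of the line;
  # GRUB_CMDLINE_LINUX_DEFAULT is not matched because of the '="' anchor.
  sed -i.bak 's/^\(GRUB_CMDLINE_LINUX="[^"]*\)"/\1 modprobe.blacklist=nouveau"/' "$file"
}
```

Usage on the node: blacklist_nouveau && grub2-mkconfig -o /boot/grub2/grub.cfg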

——–

ADD NEW NODES THAT ARE ALREADY INSTALLED

# Add a new node that is already installed, for example one named newlogin:
nodeadd newlogin groups=...
# Next, set its attributes, such as the IP address:
chdef -t node -o newlogin hosts.ip=1.1.1.1
# OR
# edit the table itself with "tabedit hosts", which works like vi.
# BE VERY CAREFUL WHEN YOU EDIT TABLES!! IT IS RECOMMENDED TO USE CHDEF INSTEAD.
# After you finish setting the new node's attributes:
# Set the /etc/hosts file
makehosts
# Set the local DNS
makedns
# IF YOU WANT TO DELETE A NODE, USE nodepurge!! Do not edit any files such as /etc/hosts.
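Because a typo in the IP goes straight into the hosts table, it can help to validate the address before calling chdef. This is a minimal bash sketch; “is_ipv4” is a hypothetical helper that accepts only dotted-quad addresses with four numbers from 0 to 255.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only for a dotted-quad IPv4 address (0-255 per octet).
is_ipv4() {
  local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$'
  [[ $1 =~ $re ]] || return 1
  local octet
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# avoids octal interpretation of values like 010
    (( 10#$octet <= 255 )) || return 1
  done
}
```

Usage: ip=1.1.1.1; is_ipv4 "$ip" && chdef -t node -o newlogin hosts.ip="$ip" && makehosts && makedns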